The Coverage Block

From Pirate Cinema: Volume 3 of the New Machine Cinema

Before I directed my first feature film in 2014, a cinematographer gave me this simple advice: wide, medium, and close-up, and the scene will work. Doesn’t matter what the scene is. You get the coverage, you’ve got the scene. This of course gets augmented with whatever you come up with, and one certainly should go beyond those bounds or risk creative stagnation.

But the point is that each scene must be covered from multiple angles to create natural filmic editing. It is a formula that, if you use it, will work regardless of any other factor.

I have noticed a pattern in AI cinema: directors seem to be afraid of capturing scenes through coverage, or rather, they have not been found by this cinematographer. It is a natural instinct. Having your image, your first impulse is to animate it and then build the movie from master shots.

Now, the bones of this essay took shape when I kept hearing the misconception that environmental consistency has not yet been solved with AI.

The truth is, the means for classical film grammar have been emerging piecemeal from the very beginning of AI cinema in 2023. From the start, standard filmic coverage was always possible, just without environmental consistency.

When AI video evolved into basic text-to-video, you were at the mercy of the video algorithms and had to rely on the varied environments and characters to blend together. Filmmakers at the time did not even bother with coverage. They went with what the rudimentary tools could do, which tended to be plain montage with voice-over: a succession of scenes constituting a greater implication, without cohesive textures.

However, I was intent on treating the tools, though rudimentary, no differently than a film set. I used a script I had written for tradfilm and cast with live-action actors, before deciding it would be AI’s first feature film: Window Seat. I decided to tell this story with only AI tools as they stood, planning on basic coverage, largely close-ups with two-shots sprinkled in, and the aesthetic of a 16mm black-and-white indie film. During the extremely arduous process, I learned how to sell the illusion of cinema.

It took a certain leap of faith to understand that people do not watch films by placing environments under a microscope. They are too busy watching the plot, the characters, the dialogue, the feeling.

By late 2023, you no longer had to rely on the randomness of whatever your AI system hallucinated. You could now animate still images.

In 2024, I began my second AI feature film, history’s first animated feature film made entirely with artificial intelligence, DreadClub: Vampire’s Verdict. Character consistency was fully solved partway into production with the Midjourney character reference parameter, --cref, meaning you now got the exact same character in every still image you generated. You placed the image in the AI software, then animated it. But the problem remained: no environmental consistency.

My creative workarounds evolved: I sold the film with rapid micro-cuts, black and white, and frantic camera movement. I needed the sound and fury and aesthetic of the film to drown out its limitations. There were also now camera motion controls: push-ins, zooms, pans, tilts.

In 2025, with my third AI feature, A Very Long Carriage Ride, a new tool called first frame / last frame was introduced, allowing you to give the algorithm the exact image you want to start with and the exact image you want to end with, and it will animate the motion in between. But I took to this backward: I would animate a starting frame, then use the result to create more starting frames, with the character in different poses.

This was a breakthrough, affording a feature film actual performance staging on screen. You could get any motion you needed. You can generate a character walking, freeze the frame, and have them end up exactly where you need them to land. (The freeze frames created a magic charm akin to the hand-cranked cameras of early cinema, where you can spot the exact cut.)

Many limitations of the prior films had now been addressed in A Very Long Carriage Ride. Instead of rapid one-second micro-cuts, there were now thirty-second long takes. There was almost no reliance on montage; the entire film unfolded like a play. And lastly, it wasn’t only a series of close-ups: it had frequent three-shots, four-shots, even a ten-shot.

But there was still one problem. Environmental consistency, as the critics said, had not been solved. Until I realized, yes it had. I had been using these tools without having the name for the methodology. In fact, environmental consistency had been solved well before DreadClub, with the invention of camera controls in late 2023.

This is what I call the Coverage Block. It’s very simple.

The Coverage Block is a single 2D image that encapsulates the full dimensions of a cinema scene.

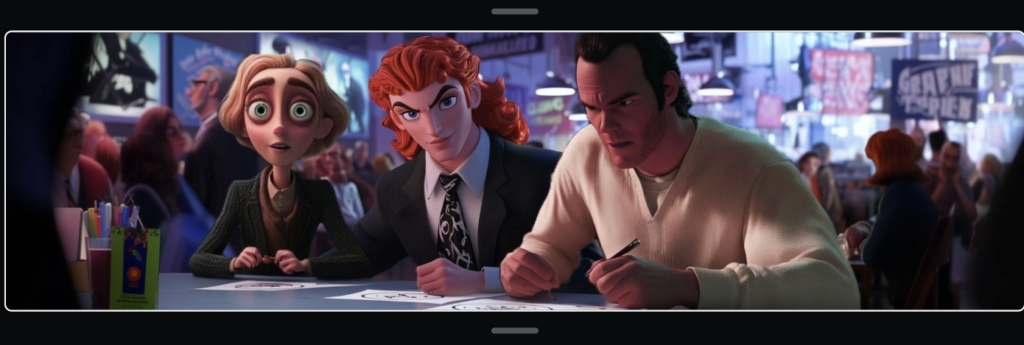

In my fourth AI feature, My Boyfriend is a Superhero!?, I took full advantage of environmental consistency. I programmed camera motion and then took a screen grab where I directed the camera to land. This afforded me the perfect environmental continuity across the staging of a full scene.

By pushing in, tilting, panning, you are stealing every new angle that you need with screen grabs.

Start with your image.

Enhance it wider than you need, so that it takes in the full environment. (For the record, this enhancement feature has been offered in Midjourney since mid-2024 and is now a mainstay AI tool.)

Composite the other characters together. Do your best to match the lighting with the poses you choose. But you will find that lighting discrepancies flatten once a character enters into a shared animated universe.

I like to keep it wide, encapsulating approximately 2.5 frames at 16×9. This allows one to use first frame / last frame to pan across a single shot while retaining environmental consistency.
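As a rough illustration of that geometry, the sketch below works out where the first and last 16×9 windows would sit on a Coverage Block about 2.5 frames wide. The 1920×1080 frame size, the `Window` type, and the `pan_windows` helper are all assumptions made for this example; they are not part of any AI tool's interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    """A crop rectangle in pixels (hypothetical helper type)."""
    left: int
    top: int
    right: int
    bottom: int


def pan_windows(frame_width: int, frame_height: int,
                block_frames: float = 2.5, steps: int = 2) -> list[Window]:
    """Return evenly spaced frame-sized windows, left to right, across the block.

    block_frames is how many frame widths the Coverage Block spans
    (2.5 follows the essay's rule of thumb; it is not a fixed requirement).
    """
    if steps < 2:
        raise ValueError("need at least a first and a last window")
    block_width = round(frame_width * block_frames)
    travel = block_width - frame_width  # distance the window can slide
    windows = []
    for i in range(steps):
        left = round(travel * i / (steps - 1))
        windows.append(Window(left, 0, left + frame_width, frame_height))
    return windows


# Assumed 1920x1080 frames: the block is 4800 pixels wide.
first, last = pan_windows(1920, 1080)
print(first)  # Window(left=0, top=0, right=1920, bottom=1080)
print(last)   # Window(left=2880, top=0, right=4800, bottom=1080)
```

The two rectangles could serve as the first frame and last frame of a pan. Passing a larger `steps` value gives intermediate angles from the same image.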

Another illustration:

From this Coverage Block, I program camera motion into the shot. I can now grab perfect environmental consistency across the scene. Every angle I need:

Remember, AI offers what I call the infinite protocol: you can get one thousand shots at almost no cost. With the infinite protocol, because you can get more, you had better. So, for instance, I released A Very Long Carriage Ride in two separate versions, one stop motion and one 2D animation. I call it cinema’s first dual release (“One Film, Two Ways”). In My Boyfriend is a Superhero!? I made an entire second protagonist, allowing the audience to choose before they watch the film.

In tradfilm, everyone is fighting for baseline. In AI, you achieve baseline at the start, and now the question is, where do you place your focus? Traditional filmmakers simply never had the means to go past baseline. On a traditional film set, they are limited to some four or five angles of coverage per scene, with something like two or three scenes per day, all at a cost of something like one million dollars per day.

In AI, you are limited by nothing. All you need is your Coverage Block: a three-dimensional scene encapsulated by one single image.

Just as having coverage means you have your scene, having the Coverage Block means you have your AI scene.

Say you have a one-hour film consisting of fifty scenes, each a little over one minute. All you need are fifty Coverage Blocks, and you have your film. It is essentially mapped with a series of complex still images. Consider it a three-dimensional storyboard.
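The arithmetic behind that example can be sketched as a simple plan: one Coverage Block per scene, with the running time divided evenly. The `ScenePlan` type, the `plan_film` helper, and the file naming are hypothetical and used only for illustration. Real scenes would of course vary in length.

```python
from dataclasses import dataclass


@dataclass
class ScenePlan:
    number: int
    coverage_block: str  # hypothetical filename for the scene's single image
    seconds: float       # average running time allotted to the scene


def plan_film(film_minutes: float, scene_count: int) -> list[ScenePlan]:
    """Map a film to one Coverage Block per scene, splitting runtime evenly."""
    per_scene = film_minutes * 60 / scene_count
    return [
        ScenePlan(n, f"coverage_block_{n:02d}.png", per_scene)
        for n in range(1, scene_count + 1)
    ]


plan = plan_film(film_minutes=60, scene_count=50)
print(len(plan), plan[0].seconds)  # 50 72.0
```

Fifty scenes in sixty minutes comes to 72 seconds each, which is the "little over one minute" in the example.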

The Coverage Block now arrives as the formalization of an invisible habit: freezing the frame, exploring spatial consistency, treating coverage as post rather than production. The methodology emerged gradually, one tool at a time, as I journeyed across four films to tinker, evolve, and learn. A scene becomes like a puzzle that you can arrange any which way you want. And the best part is, as the cinematographer told me, whatever you imagine, it will work. On a traditional film set, if you miss the shot, you will find yourself in the edit bay months later, unable to fill in the gap. Now it is trivial to go in and grab it.

Nothing fancy. No need to re-invent the wheel, and surely this will be streamlined: perhaps we will hand our AI agents our character models, costumes and a panoramic environment and ask for full coverage of the scene, all with one click.

Just a couple of weeks after this post, Veo 3 added one-click camera changes for any video you generate, streamlining the problem of coverage and environmental consistency for AI filmmaking.